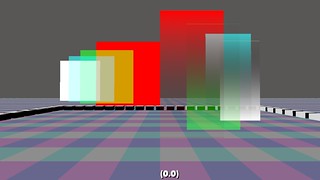

The red and white surfaces on the left are opaque, but you can see through them.

To address that, I changed the weighting function to simply 10 raised to the negative distance to the surface, multiplied by alpha as in the original paper. The function is configured with two parameters: the distance to the closest transparent surface, and the spacing between two transparent surfaces (s). For every s units you move away from the camera, a surface gets only 10% of the weight of the surface in front of it. The distance to the closest transparent surface matters because we are using 16-bit floats, so this exponential function runs out of precision quickly. For that reason the weighting function is clamped, and objects far away are all weighted equally.
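As a rough illustration of the function described above, here is a minimal Python sketch. The names `oit_weight`, `nearest_depth`, `spacing` and `max_decades` are made up for this example, and the default clamp of 4 decades is an assumption (picked because the smallest normal 16-bit float is about 6.1e-5), not the value used in the actual shader.

```python
def oit_weight(alpha, depth, nearest_depth, spacing, max_decades=4.0):
    """Weight for one transparent fragment.

    alpha         -- fragment opacity in [0, 1]
    depth         -- distance from the camera to the fragment
    nearest_depth -- distance to the closest transparent surface
    spacing       -- s: distance over which the weight drops to 10%
    max_decades   -- hypothetical clamp, so the weight stays
                     representable in a 16-bit float
    """
    # How many "s" steps the fragment sits behind the closest surface.
    decades = (depth - nearest_depth) / spacing
    # Clamp: anything in front of nearest_depth gets full weight,
    # anything too far away shares the same minimum weight.
    decades = min(max(decades, 0.0), max_decades)
    return alpha * 10.0 ** (-decades)


# A surface one spacing behind the closest one gets 10% of its weight.
front = oit_weight(1.0, 5.0, nearest_depth=5.0, spacing=2.0)
behind = oit_weight(1.0, 7.0, nearest_depth=5.0, spacing=2.0)
print(front, behind)
```

With the clamp in place, two surfaces that are both far beyond `nearest_depth + max_decades * spacing` end up with identical weights, which is the "equally weighted" behaviour mentioned above.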

Here's a video that shows the new function, plus a comparison with normal sorted transparency and with opaque surfaces for reference.

Notice that you can still see through the red surface, which is supposedly opaque, but the result is almost correct. In comparison, sorted transparency suffers from the usual popping artifacts, since the sorting is done per primitive, not per pixel. A per-primitive sort can only place one primitive entirely in front of or behind another, so the general case can't be solved without OIT. The opaque rendering, which uses the depth buffer as if the surfaces were opaque, is there to illustrate that a fully opaque pipeline has other issues, such as Z-fighting. The weight-blended OIT gives a consistent, smooth result, free of popping and Z-fighting artifacts.
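To see why the weighted approach cannot pop, here is a small single-channel sketch that compares it with classic "over" blending. The compositing formula is my reading of the original paper (weighted average of the colors, scaled by the total coverage), and the helper names `weight`, `composite_weighted` and `composite_over` are hypothetical. The weight used here is a simplified stand-in with the nearest distance fixed at 0 and s fixed at 1.

```python
from math import prod, isclose

def weight(alpha, depth):
    # Simplified stand-in: alpha * 10^-depth, clamped to 4 decades.
    return alpha * 10.0 ** (-min(max(depth, 0.0), 4.0))

def composite_weighted(background, fragments):
    """fragments: iterable of (color, alpha, depth), in any order."""
    fragments = list(fragments)
    accum_color = sum(c * weight(a, z) for c, a, z in fragments)
    accum_weight = sum(weight(a, z) for c, a, z in fragments)
    # Fraction of the background still visible through all layers.
    transmittance = prod(1.0 - a for c, a, z in fragments)
    if accum_weight == 0.0:
        return background
    average = accum_color / accum_weight
    return average * (1.0 - transmittance) + background * transmittance

def composite_over(background, fragments):
    """Classic alpha blending, applied in the order given."""
    result = background
    for c, a, z in fragments:
        result = c * a + result * (1.0 - a)
    return result

frags = [(1.0, 0.5, 1.0), (0.2, 0.5, 2.0)]
swapped = list(reversed(frags))

# Sums and products commute, so submission order does not matter.
print(isclose(composite_weighted(0.0, frags),
              composite_weighted(0.0, swapped)))
# "Over" blending depends on the order, which is what causes popping
# when the per-primitive sort flips.
print(composite_over(0.0, frags), composite_over(0.0, swapped))
```

The weighted result only uses sums and a product over the fragments, so a change in draw order cannot change the pixel, while the "over" result changes as soon as two primitives swap places in the sort.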

As the original paper explains, it is useful to use traditional alpha-blended transparency as a reference, but that does not mean it is more correct, since partial coverage is a complex phenomenon and weight-blended OIT gives more plausible lighting results in many situations.
